Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

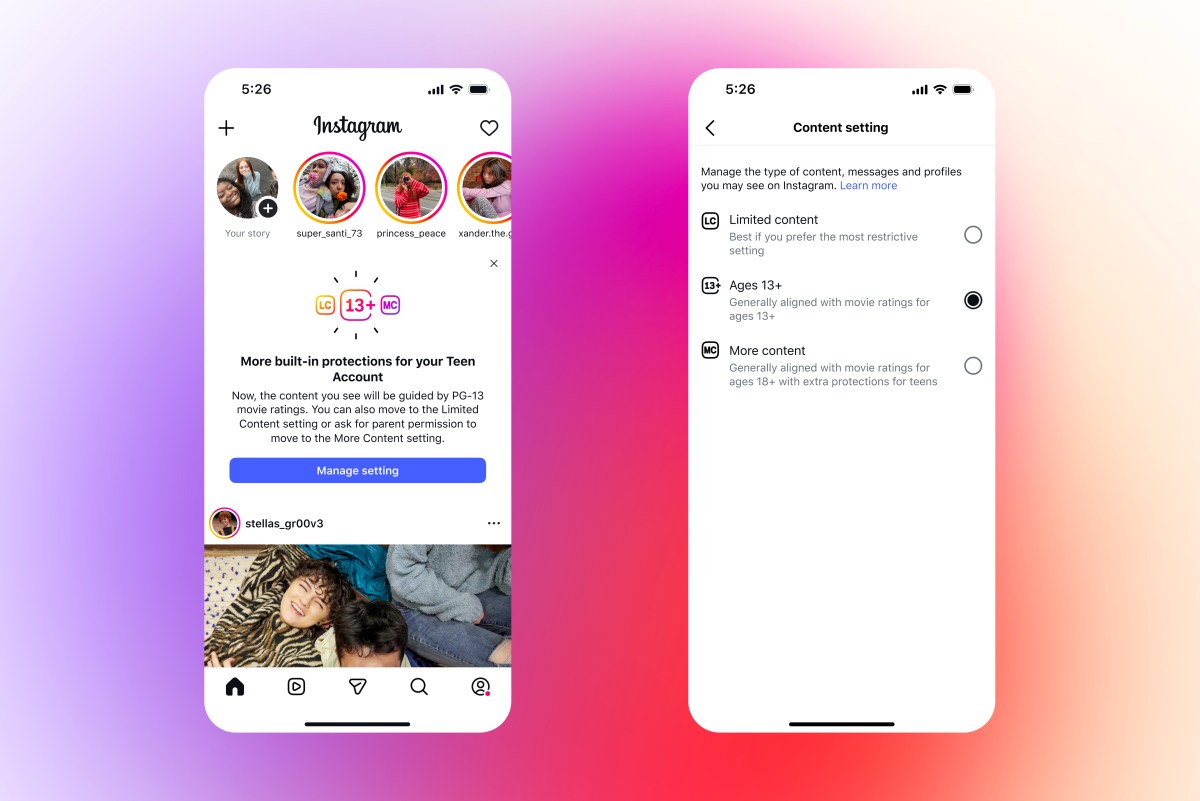

In an effort to protect its underage users from harmful content, Instagram is imposing new restrictions on teen accounts. By default, users under 18 will only see content that adheres to PG-13 movie ratings, avoiding themes such as extreme violence, sexual nudity, and graphic drug use.

Users under the age of 18 will not be able to change this setting without explicit consent from their parents or guardians.

Instagram is also introducing a stricter content filter, called Limited Content, which will prevent teens from seeing and posting comments on posts where the setting is turned on.

The company said that starting next year, it will place more restrictions on the types of chats teens can have with AI bots when the Limited Content filter is turned on. It's already applying the new PG-13 content settings to AI conversations.

The move comes as chatbot makers like OpenAI and Character.AI are being taken to court on charges of causing harm to users. Last month, OpenAI introduced new restrictions for ChatGPT users under 18 and said it is training the chatbot to refrain from “flirty talk.” Earlier this year, Character.AI also added new limits and parental controls.

Instagram, which has built teen safety tools across accounts, direct messages, search, and content, is expanding controls and restrictions in various areas for underage users. The social media service will not allow teens to follow accounts that share age-inappropriate content, and if they already follow such accounts, they will not be able to see or interact with content from those accounts, and vice versa. The company is also removing these accounts from recommendations, making them more difficult to find.

The company is also preventing teens from seeing inappropriate content sent to them in direct messages.

Meta already restricts teen accounts from discovering content related to eating disorders and self-harm. The company is now blocking words like “alcohol” or “blood,” and says it’s also making sure teens don’t find content in those categories by misspelling those terms.

The company said it is testing a new way for parents to use moderation tools to flag content that should not be recommended to teens. Flagged posts will be sent to the review team.

Instagram is rolling out these changes in the US, UK, Australia, and Canada starting today, and globally next year.