Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Google DeepMind is launching two new AI models designed to help robots “perform a wider range of real-world tasks than ever before.” The first, called Gemini Robotics, is a vision-language-action model capable of understanding new situations, even if it has not been trained on them.

Gemini Robotics is built on Gemini 2.0, the latest version of Google's flagship AI model. During a press briefing, Carolina Parada, senior director and head of robotics at Google DeepMind, said that Gemini Robotics “draws from Gemini's multimodal world understanding and transfers it to the real world by adding physical actions as a new modality.”

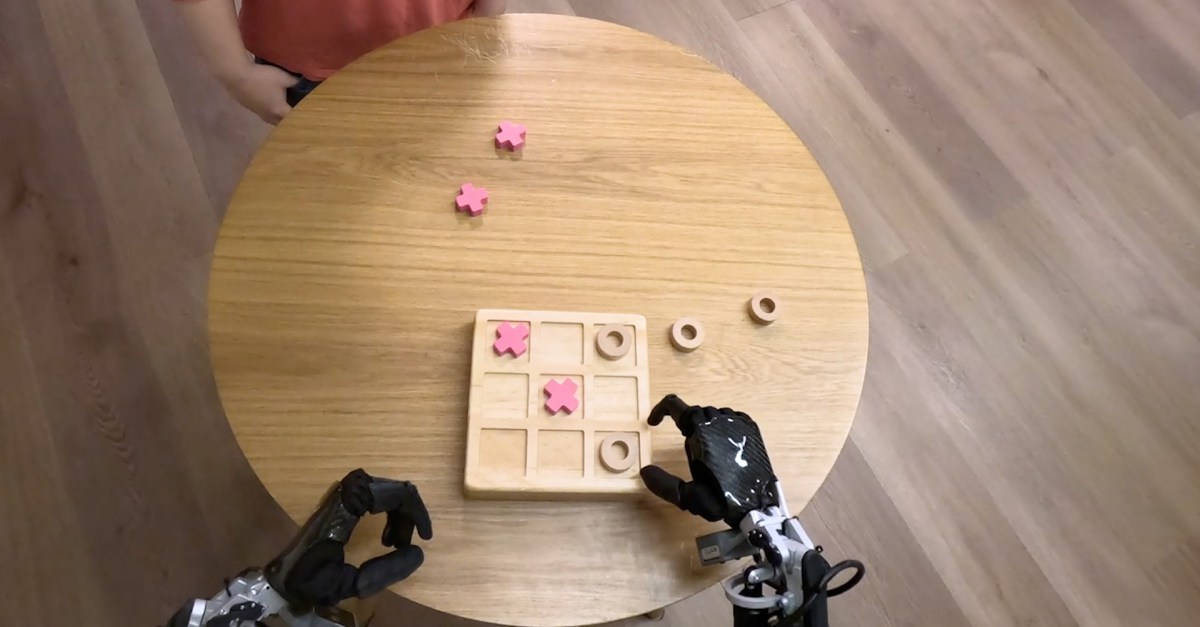

The new model makes advances in three key areas that Google DeepMind says are essential to building helpful robots: generality, interactivity, and dexterity. In addition to being able to generalize to new scenarios, Gemini Robotics is better at interacting with people and its environment. It is also capable of performing more precise physical tasks, such as folding a piece of paper or removing a bottle cap.

“While we have made progress in each one of these areas individually in the past with general robotics, we are bringing (significantly) increased performance in all three areas with a single model,” Parada said. “This enables us to build robots that are more capable, that are more responsive, and that are more robust to changes in their environment.”

Google DeepMind is also launching Gemini Robotics-ER (for embodied reasoning), which the company describes as an advanced visual language model that can “understand our complex and dynamic world.”

As Parada explains, when you are packing a lunchbox and have items on a table in front of you, you need to know where everything is, as well as how to open the lunchbox, how to grasp the items, and where to place them. That is the kind of reasoning Gemini Robotics-ER is expected to do. It is designed for roboticists to connect with existing low-level controllers (the systems that control a robot's movements), allowing them to enable new capabilities powered by Gemini Robotics-ER.

On the safety front, Google DeepMind researcher Vikas Sindhwani told reporters that the company is developing a “layered approach,” adding that Gemini Robotics-ER models are trained to evaluate whether or not a potential action is safe to perform in a given scenario. The company is also releasing new benchmarks and frameworks to help further safety research in the AI industry. Last year, Google DeepMind introduced its “Robot Constitution,” a set of Isaac Asimov-inspired rules for its robots to follow.

Google DeepMind is working with Apptronik to “build the next generation of humanoid robots.” It is also giving “trusted testers” access to its Gemini Robotics-ER model, including Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools. “We're very focused on building the intelligence that is going to be able to understand the physical world and be able to act on that physical world,” Parada said. “We're very excited to leverage this across multiple embodiments and many applications for us.”